
Verbal Morality Statute Enforcer 2000

The VMSE 2000 is the newest iteration in a long-standing series of verbal hate crime prevention devices. It detects language violations in all languages spoken in the region of purchase within a range of up to 6 meters, and multiple VMSE 2000 units can work in conjunction to cover your available space and keep you safe from dreaded language violations.

Our analysts predict that the device will integrate into everyone's way of life and become an accepted part of society no later than 2032. We are happy to present our adaptation of what the future will look like under the influence of the VMSE 2000.

Detailed Specification

- The VMSE 2000 listens for speech input and detects verbal morality violations committed by maniacs currently in the device's proximity.

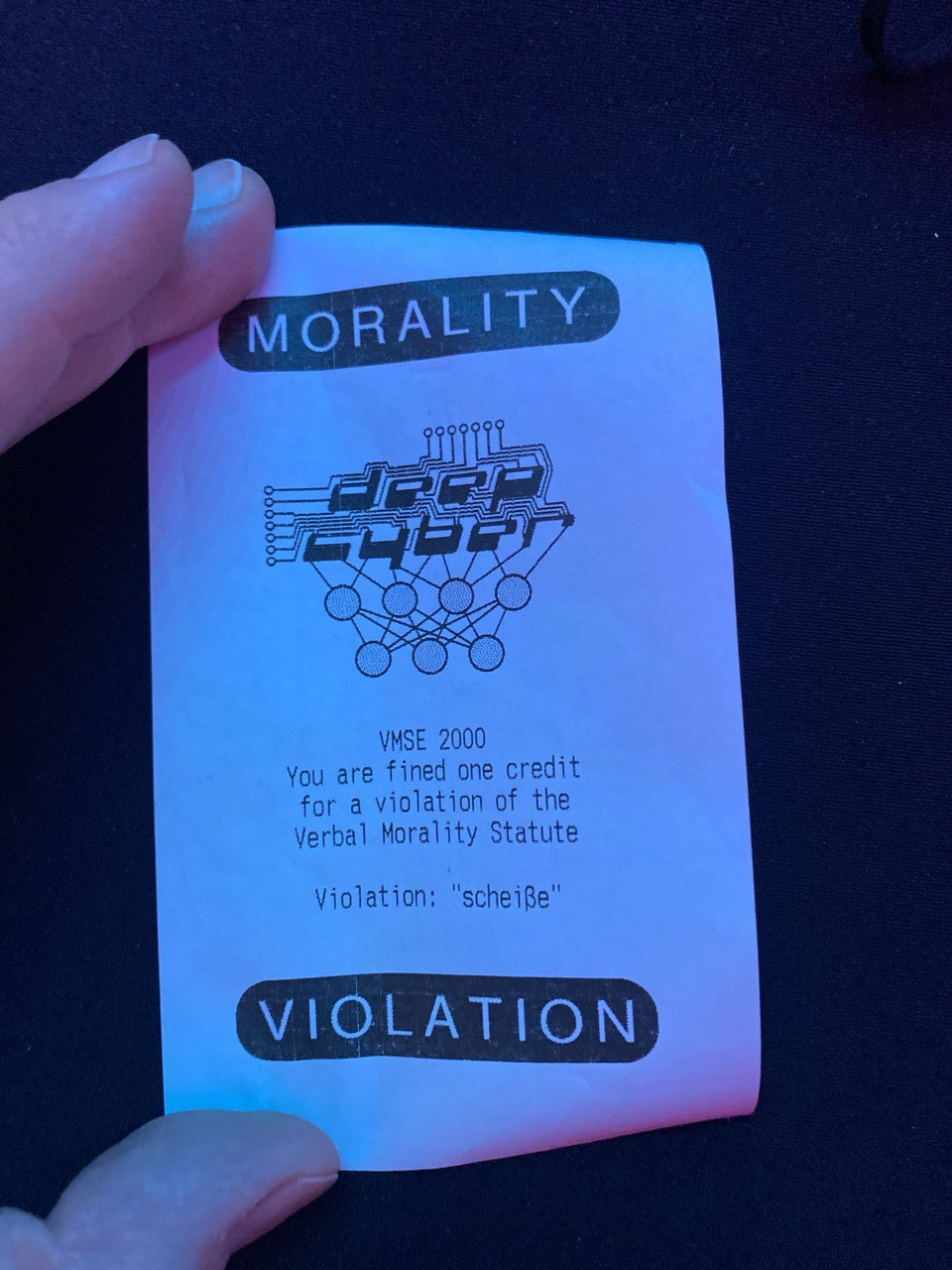

- A detected language violation results in a verbal notification as well as a printed receipt for a credit fine in an amount appropriate to the severity of the individual violation.

- The morality violation is announced with a sharp warning tone, guaranteeing that the crime receives attention both from the maniac himself and from society in general.

- The public display of the maniac's moral misdemeanour will apply social pressure on the maniac, leading to an adjustment of the subject's moral values.

- The continuous enforcement of the verbal morality statute by the VMSE 2000 will result in a better society for everyone's benefit.

- The VMSE 2000 emits a modern aura of morality with aesthetics inspired by the best designers of San Angeles.
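The specification above does not publish the detection rules or the fine schedule. As a purely hypothetical sketch, assuming the detector already produces a text transcript, matching that transcript against a word list with per-word fines could look like this; `SEVERITY_TABLE`, its words, and its amounts are invented for illustration.

```python
import re
from dataclasses import dataclass

# Hypothetical severity table: forbidden word -> fine in credits.
# The actual VMSE 2000 word list and fine schedule are not published here.
SEVERITY_TABLE = {
    "darn": 1,
    "heck": 1,
    "crap": 3,
}


@dataclass
class Violation:
    word: str
    fine: int


def detect_violations(transcript: str, table: dict[str, int]) -> list[Violation]:
    """Return one Violation per forbidden word found in the transcript."""
    # Lowercase, then keep runs of letters/apostrophes as whole words.
    words = re.findall(r"[a-z']+", transcript.lower())
    return [Violation(w, table[w]) for w in words if w in table]


def total_fine(violations: list[Violation]) -> int:
    """Sum the individual fines so one receipt can cover an utterance."""
    return sum(v.fine for v in violations)


if __name__ == "__main__":
    found = detect_violations("Oh crap, I missed the darn bus!", SEVERITY_TABLE)
    print(found)
    print(total_fine(found), "credits")
```

Whole-word matching on a transcript keeps the example simple; the real detector may work quite differently.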

Development

The VMSE 2000 is one of the most important projects by Deep Cyber, if not one of the most important efforts of our lifetime. While the physical parameters and technical details were finalised at an early stage of development back in 2017, the component required for reliable detection of violations proved to be much more complicated. The depth of Cyber necessary to overcome each and every obstacle on the way to fulfilling our goal required a prolonged era of research. After six years of development, we were finally able to present our fully functioning prototype to the public at the very end of 2023, at the perfect event for such a presentation: the Chaos Communication Congress, 37C3.

Hardware

- Raspberry Pi 5 (Raspberry Pi 4 also successfully tested)

- Thermal Printer (compatible with `python-escpos`)

- USB Audio Adapter

- PlayStation Eye USB camera (CCD taped over)
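The hardware list calls for a thermal printer compatible with `python-escpos`, but the receipt layout is not specified. The following hypothetical sketch only builds the plain-text fine receipt, wrapped to the paper width; `format_receipt`, its wording, and the 32-column `LINE_WIDTH` are assumptions, not taken from the firmware.

```python
import textwrap

# Assumption: 32 characters per line, common for 58 mm thermal paper with
# the default font. Adjust to your printer's actual line width.
LINE_WIDTH = 32


def format_receipt(violation: str, fine: int, width: int = LINE_WIDTH) -> str:
    """Build plain-text receipt lines that never exceed the paper width."""
    rule = "-" * width
    lines = ["VERBAL MORALITY STATUTE".center(width), rule]
    unit = "credit" if fine == 1 else "credits"
    notice = (
        f"You are fined {fine} {unit} for a violation of the "
        f"verbal morality statute: '{violation}'."
    )
    # textwrap.wrap splits on whitespace so each line fits within `width`.
    lines += textwrap.wrap(notice, width)
    lines.append(rule)
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(format_receipt("darn", 1))
```

The resulting string could then be handed to a `python-escpos` printer object via its `text()` method, followed by `cut()`.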

Software used

- OpenAI Whisper model (base), prompted for your language of choice!

- OpenVINO for speeding up encoding
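Whisper models operate on 16 kHz mono audio. How the detector buffers the continuous microphone stream is not described here; one hypothetical approach is to feed the model short overlapping windows, sketched below. `sliding_windows` and the 3 s window / 1 s hop are assumptions, not values from the project.

```python
from collections.abc import Iterator, Sequence

# Whisper expects audio resampled to 16 kHz mono.
SAMPLE_RATE = 16_000


def sliding_windows(
    samples: Sequence[float],
    window_s: float = 3.0,
    hop_s: float = 1.0,
    rate: int = SAMPLE_RATE,
) -> Iterator[Sequence[float]]:
    """Yield overlapping windows of `window_s` seconds, advancing by `hop_s`.

    Any utterance no longer than (window_s - hop_s) lies entirely inside at
    least one window. Input shorter than one window is yielded as-is;
    trailing samples after the last full window are not yielded (a streaming
    version would keep them for the next call).
    """
    window = int(window_s * rate)
    hop = int(hop_s * rate)
    for start in range(0, max(len(samples) - window, 0) + 1, hop):
        yield samples[start:start + window]


if __name__ == "__main__":
    five_seconds = [0.0] * (5 * SAMPLE_RATE)
    print([len(w) for w in sliding_windows(five_seconds)])
```

The overlap trades compute for recall: with these values each second of audio is transcribed up to three times.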

Source Code

- You can find the firmware / device glue at https://github.com/deepestcyber/vmse2000-firmware/.

- The voice detection part can be found at https://github.com/deepestcyber/vmse2000-detector/.

Previous Iterations

There were a lot of iterations to get to this result (and a lot of non-development as well; this project started in 2017, after all). We tested DeepSpeech, DeepSpeech 2, RNNs on top of DeepSpeech 2 feature extractors, and binary classification RNNs trained from scratch. In the end, the simplest and most robust model was OpenAI Whisper. We suspect that the amount of training data, its variance, and the resulting robustness to noise (both microphone and background noise) are what make the difference.